Our Solution

As our objective is to deliver an automated solution for detecting oil spills, our focus is on minimizing unnecessary user involvement. Initially, users will specify the geographical area they intend to monitor. Subsequently, our scheduled process will utilize Copernicus’ APIs to retrieve Sentinel-1 SAR imagery. These images, along with relevant metadata, will then be stored in an AWS S3 bucket.
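As a rough illustration of the retrieval step, the sketch below builds the JSON body for one daily request. It assumes the retrieval goes through a Sentinel Hub style Process API; the function name `build_process_request`, the example bounding box, the output format, and the evalscript are illustrative assumptions rather than the project's actual configuration, and the request is only constructed here, not sent.

```python
from datetime import date, timedelta


def build_process_request(bbox, day, width=1024, height=512):
    """Build a Process API style payload for one day of Sentinel-1 VV data.

    bbox is (min_lon, min_lat, max_lon, max_lat) in WGS84 degrees.
    The 1024 x 512 output size is an assumption about image orientation.
    """
    # Minimal evalscript returning only the VV band (assumed, not the project's).
    evalscript = """//VERSION=3
function setup() {
  return { input: ["VV"], output: { bands: 1, sampleType: "FLOAT32" } };
}
function evaluatePixel(sample) {
  return [sample.VV];
}"""
    next_day = day + timedelta(days=1)
    return {
        "input": {
            "bounds": {
                "bbox": list(bbox),
                "properties": {"crs": "http://www.opengis.net/def/crs/OGC/1.3/CRS84"},
            },
            "data": [{
                "type": "sentinel-1-grd",
                "dataFilter": {
                    "timeRange": {
                        "from": f"{day.isoformat()}T00:00:00Z",
                        "to": f"{next_day.isoformat()}T00:00:00Z",
                    }
                },
            }],
        },
        "output": {
            "width": width,
            "height": height,
            "responses": [{"identifier": "default", "format": {"type": "image/tiff"}}],
        },
        "evalscript": evalscript,
    }


# Hypothetical monitored area and date, for illustration only.
payload = build_process_request((3.0, 51.0, 3.15, 51.05), date(2024, 3, 1))
print(payload["input"]["data"][0]["dataFilter"]["timeRange"])
```

A scheduled job could build one such payload per monitored area each day and store the response, together with the request metadata, in the S3 bucket.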

Next, the prediction engine will be activated to conduct pixel-level classification. Upon detecting an oil spill, several actions will be initiated:

- The predicted oil spill area will be highlighted on the original image

- The size of the detected oil spill will be computed

- Notifications via email and SMS will be dispatched to the subscriber
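The first two actions can be sketched as follows. This is a minimal example that assumes the prediction engine outputs a binary mask (1 for oil, 0 otherwise) aligned with a grayscale image at 10 meters per pixel; the function names, the red overlay color, and the blending factor are illustrative choices, not the project's actual code.

```python
import numpy as np

PIXEL_SIZE_M = 10.0  # ground size of one pixel side, per the pipeline description


def spill_area_km2(mask, pixel_size_m=PIXEL_SIZE_M):
    """Area covered by positive (oil) pixels, in square kilometers."""
    oil_pixels = int(np.count_nonzero(mask))
    return oil_pixels * pixel_size_m ** 2 / 1e6


def highlight_spill(gray_image, mask, color=(255, 0, 0), alpha=0.5):
    """Blend a color over the oil pixels of a grayscale uint8 image."""
    rgb = np.stack([gray_image] * 3, axis=-1).astype(np.float32)
    overlay = np.array(color, dtype=np.float32)
    oil = mask.astype(bool)
    rgb[oil] = (1 - alpha) * rgb[oil] + alpha * overlay
    return rgb.astype(np.uint8)


# Synthetic example: a uniform image with a 50 x 200 pixel predicted spill.
image = np.full((512, 1024), 100, dtype=np.uint8)
mask = np.zeros((512, 1024), dtype=np.uint8)
mask[100:150, 200:400] = 1

area = spill_area_km2(mask)  # 10,000 px * 100 m^2 = 1.0 km^2
highlighted = highlight_spill(image, mask)
print(area, highlighted.shape)
```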

Data Pipeline

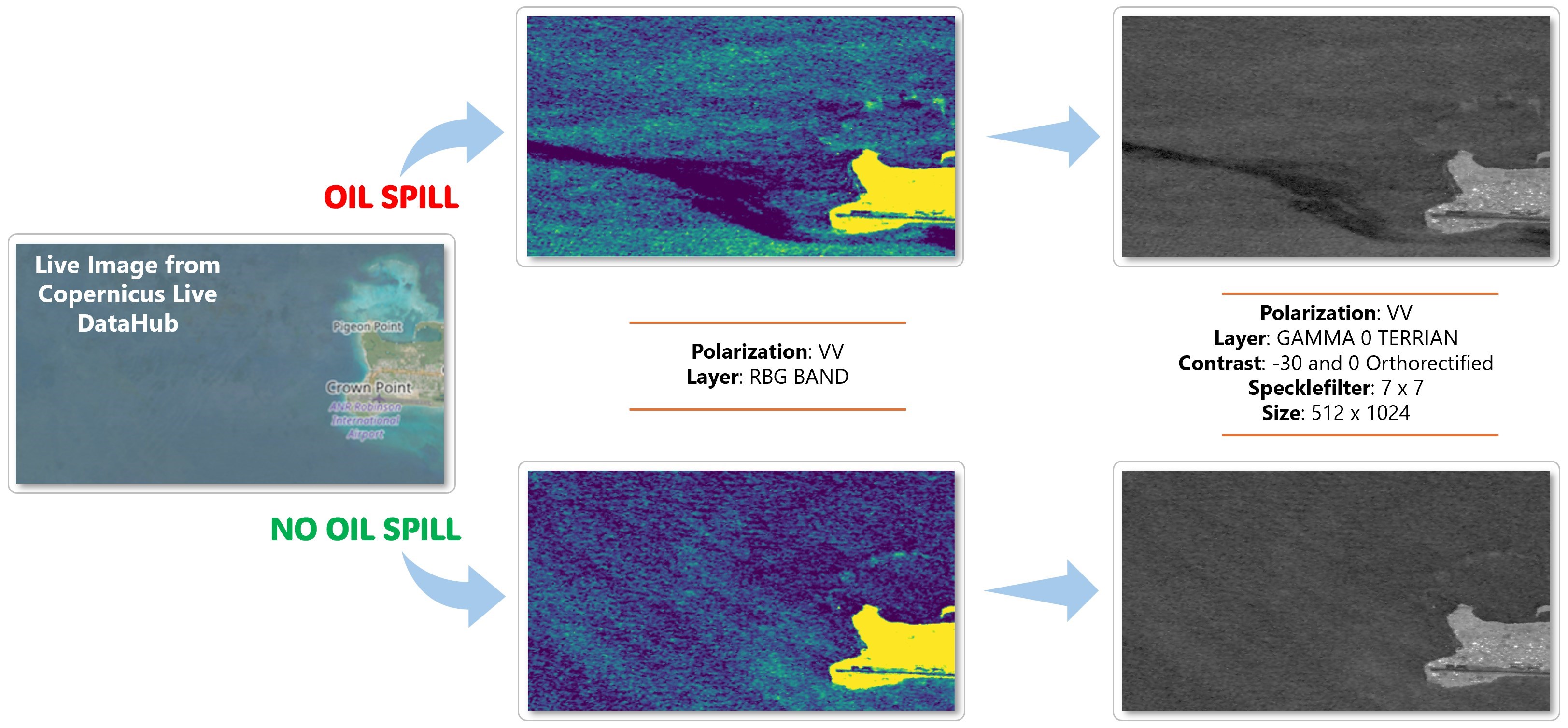

For image preprocessing, we use the Process API at a resolution of 10 x 10 meters per pixel, so each 512 x 1024 image covers about 52.4 square kilometers. Because Sentinel-1 coverage is updated regularly, we check the API daily for the latest image data. We then apply a series of image processing steps that make the image easier to classify:

- Convert the image from RGB color to grayscale.

- Set the polarization to the VV band.

- Apply a 7 x 7 speckle filter to smooth the image.

- Apply a linear transformation to dB (-30 to 10) to enhance contrast.

The ultimate outcome is a refined and clear image, enabling our model to effectively discern the presence of oil spills within it.
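The filtering and dB scaling steps can be illustrated with NumPy. This sketch makes several assumptions: the input is linear VV backscatter, the speckle filter is a plain 7 x 7 moving average (the actual filter type is not stated here), and the "linear transformation to dB" means converting to decibels, clipping to the -30 to 10 range, and rescaling linearly to 0..255. The function names and the synthetic data are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def mean_speckle_filter(linear, size=7):
    """Smooth speckle with a size x size moving average (a stand-in filter)."""
    pad = size // 2
    padded = np.pad(linear, pad, mode="reflect")  # keeps the output shape
    windows = sliding_window_view(padded, (size, size))
    return windows.mean(axis=(-2, -1))


def db_to_uint8(linear, db_min=-30.0, db_max=10.0):
    """Convert to dB, clip to [db_min, db_max], and map linearly to 0..255."""
    db = 10.0 * np.log10(np.maximum(linear, 1e-10))  # guard against log(0)
    db = np.clip(db, db_min, db_max)
    scaled = (db - db_min) / (db_max - db_min) * 255.0
    return np.round(scaled).astype(np.uint8)


# Synthetic VV backscatter: noisy sea clutter with a darker patch.
rng = np.random.default_rng(0)
vv = rng.exponential(scale=0.05, size=(64, 128))
vv[20:40, 30:90] *= 0.1  # oil damps surface roughness, so it appears darker

smoothed = mean_speckle_filter(vv, size=7)
image = db_to_uint8(smoothed)
print(image.shape, image.dtype)
```

Filtering before the dB conversion follows the order of the steps listed above; averaging in the linear domain also avoids biasing the mean toward low values.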

Training Dataset

The images sourced from Sentinel-1 SAR data, acquired through the European Space Agency (ESA) database and Copernicus Open Access Hub, provide a comprehensive view of oil pollution incidents spanning from September 28, 2015, to October 31, 2017. These images were collected as part of the study "Oil Spill Identification from Satellite Images Using Deep Neural Networks" conducted by Marios Krestenitis, Georgios Orfanidis, Konstantinos Ioannidis, Konstantinos Avgerinakis, Stefanos Vrochidis, and Ioannis Kompatsiaris. Along with capturing instances of oil spills and related incidents, the dataset includes Ground Truth masks to ensure accuracy in identification and analysis. The geographic coordinates and timestamps of these incidents have been meticulously confirmed through records from the EMSA CleanSeaNet service. This extensive dataset offers a detailed understanding of the spatial and temporal dynamics of oil pollution within the specified timeframe. You can find more details about the study here.

Data Pre-Processing

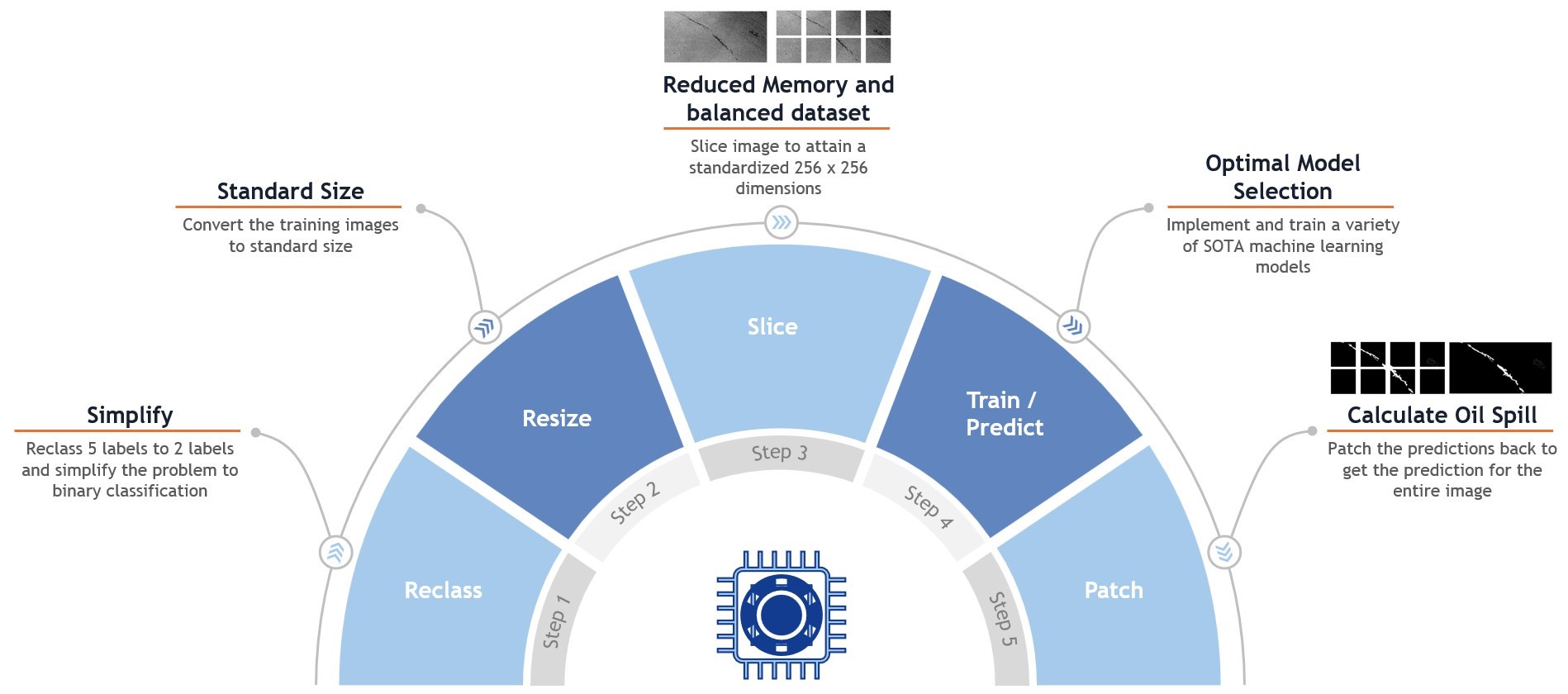

For our model training, we undertook the following data pre-processing measures:

- Convert 5-class labels to 2 classes: The original dataset contains 5 classes, namely sea, land, oil-spill, ship, and look-alike. Since our primary goal is to detect the presence of an oil spill, we transformed the labels to binary: oil-spill and not oil-spill.

- Resize training images: To match the size of live Sentinel-1 images, we resized the original images from 650 x 1250 to 512 x 1024.

- Slice images: Because of memory limits in our training environment, we sliced each image into eight 256 x 256 tiles and fed them into our model for training. This step reduced memory needs from > 50GB to ~22GB. Slicing also made the ratio of positive to negative images more balanced.

- Train the model and perform predictions

- Stitch sliced images back: At the end of the prediction process, we stitched the tiles back together for user visualization and for oil spill area calculation.
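The label conversion, slicing, and stitching steps above can be sketched with NumPy. The class id used for oil spills (`OIL_CLASS_ID = 1`), the 512-row by 1024-column orientation, and the function names are assumptions made for illustration; the random mask stands in for a real ground truth mask.

```python
import numpy as np

OIL_CLASS_ID = 1  # assumed id of the oil-spill class in the 5-class masks
TILE = 256


def binarize_labels(mask, oil_id=OIL_CLASS_ID):
    """Map a 5-class mask to 1 (oil-spill) and 0 (not oil-spill)."""
    return (mask == oil_id).astype(np.uint8)


def slice_tiles(image, tile=TILE):
    """Cut an image into tile x tile pieces in row-major order."""
    h, w = image.shape[:2]
    return [image[r:r + tile, c:c + tile]
            for r in range(0, h, tile)
            for c in range(0, w, tile)]


def stitch_tiles(tiles, cols):
    """Reassemble row-major tiles into one image with `cols` tiles per row."""
    rows = [np.hstack(tiles[i:i + cols]) for i in range(0, len(tiles), cols)]
    return np.vstack(rows)


# Synthetic 5-class mask at the resized resolution (512 rows x 1024 columns).
rng = np.random.default_rng(0)
mask = rng.integers(0, 5, size=(512, 1024))

binary = binarize_labels(mask)
tiles = slice_tiles(binary)                          # 2 rows x 4 columns
stitched = stitch_tiles(tiles, cols=1024 // TILE)

print(len(tiles), tiles[0].shape, np.array_equal(stitched, binary))
```

Because the tiles are kept in row-major order, per-tile predictions can be stitched with the same `stitch_tiles` call, and the area calculation can then run on the full-size mask.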

Models

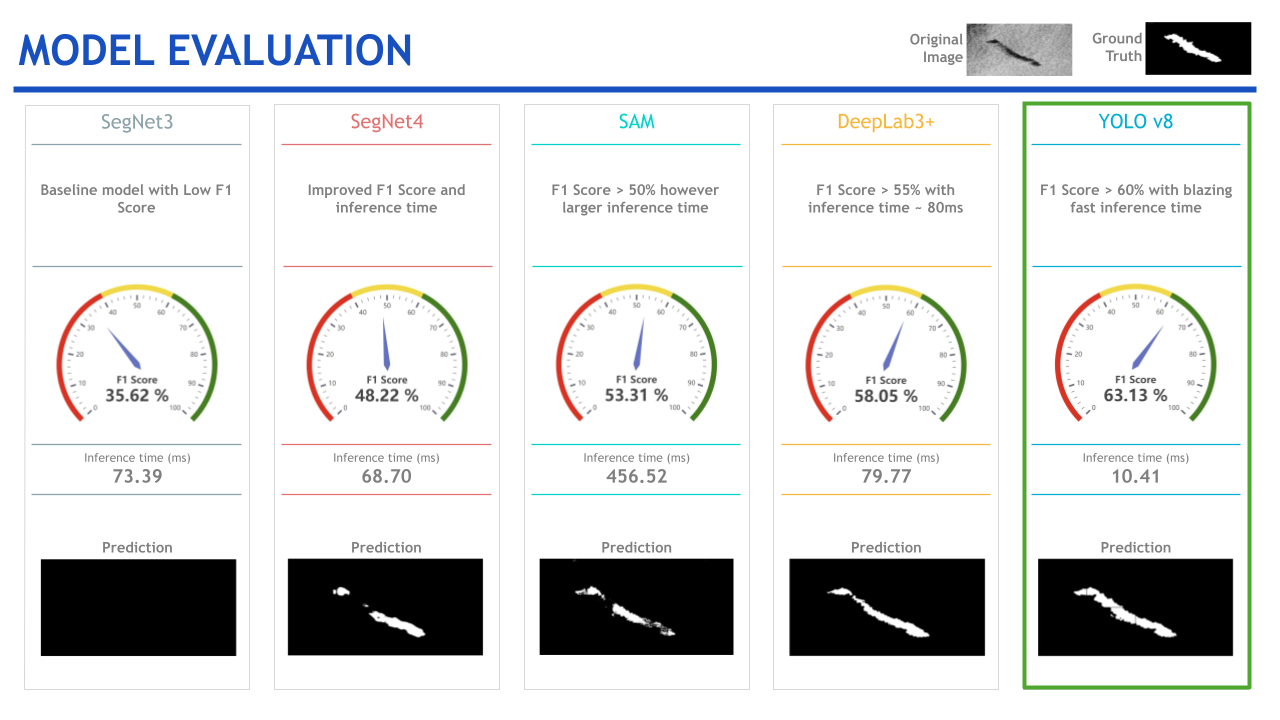

SegNet: CNN architecture for semantic segmentation with encoder-decoder structure and skip connections for improved feature fusion.

DeepLabv3+: Utilizes powerful backbone architectures like ResNet, MobileNet, or Xception, along with ASPP module for effective multi-scale feature capture, allowing input of arbitrary size for smoother segmentation with better efficiency.

YOLOv8: Object detection system that performs detection in a single forward pass. It predicts bounding boxes and class probabilities directly, treating object detection as a regression problem, and uses an S×S grid approach for prediction that is now anchor-free.

Meta's SAM: Promptable segmentation system that produces high-quality object masks from input prompts such as points or boxes. It is trained on a large dataset, which gives it strong zero-shot performance on a variety of segmentation tasks.

Model Evaluation

Of the five models assessed, YOLOv8 was the top performer, with the highest F1 score (63.13%), a notable improvement over the next best score, achieved by DeepLabv3+ (58.05%). YOLOv8 also had the fastest inference time at 10.41ms, compared with 68.70ms for the runner-up, Segnet4. Consequently, we integrated the YOLOv8 model into our final solution.
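For reference, the F1 score is the harmonic mean of precision and recall. The sketch below computes it at the pixel level from a predicted mask and a ground truth mask; whether the reported scores were computed per pixel, per image, or per detected instance is not stated here, so the pixel-level choice and the function name `pixel_f1` are assumptions.

```python
import numpy as np


def pixel_f1(pred, truth):
    """Pixel-level F1 for binary masks: 2*TP / (2*TP + FP + FN)."""
    pred = np.asarray(pred).astype(bool)
    truth = np.asarray(truth).astype(bool)
    tp = np.count_nonzero(pred & truth)    # oil predicted, oil present
    fp = np.count_nonzero(pred & ~truth)   # oil predicted, none present
    fn = np.count_nonzero(~pred & truth)   # oil missed
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Toy masks: 4 true oil pixels; the prediction finds 3 and adds 1 false alarm.
truth = np.array([[1, 1, 0, 0],
                  [1, 1, 0, 0]])
pred = np.array([[1, 1, 1, 0],
                 [1, 0, 0, 0]])

score = pixel_f1(pred, truth)  # TP=3, FP=1, FN=1, so 6 / 8 = 0.75
print(score)
```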

Key Learnings & Impact

The most important learning from this project is that data science can indeed improve our lives and protect our planet. In talking to various subject matter experts, we learned that there are no AI solutions in this space, and that the damage can be severe if an oil spill is not detected and contained early. Even in countries with plentiful resources, oil spill detection is fairly manual and costly, and such a practice is almost impossible to replicate in less well-resourced states. Our solution provides an economical way to detect oil spills.

There was a considerable learning curve in retrieving Sentinel-1 SAR images and transforming them into a format suitable for oil spill detection. Through literature review and experiments, we were able to work through the different options and achieve satisfactory results.

Currently, the Sentinel-1 satellite provides more frequent coverage for the EU than for other regions. We anticipate that our model can be generalized to other satellite service providers and eventually provide a real-time oil spill detection solution everywhere.

Key Contributions

- Productionized automated oil spill detection: OceanWatch delivers automated oil spill prediction, a capability that is not currently on the market.

- SOTA model exploration: OceanWatch research explored SOTA models that previous research had not yet evaluated.

- Generalized Sentinel-1 SAR oil spill model: We configured the Copernicus API to generate data suitable for the model to predict on.

- Low-cost solution: The cost of this service is limited to the cloud cost of running it. Additionally, we were able to use the cheapest AWS EC2 hardware due to the speed of our model.

Future Work

Our minimum viable product (MVP) demonstrated that we can indeed provide swift and accurate oil spill detection. We also identified a few directions for future enhancement, including:

- Enhancing Coastline Accuracy (False Positives): Currently, our model occasionally misidentifies coastal patterns as oil spills. To address this, we aim to explore incorporating contextual data, including environmental and geographical features, and apply reward-based reinforcement learning techniques.

- Quantifying Oil Spill Volume: While capturing area is relatively straightforward, calculating volume poses a challenge as it requires a means to measure thickness. Therefore, we aim to investigate innovative techniques such as SAR Interferometry, which involves comparing two images of the same region taken at different times, and passive microwave remote sensing data, providing measurements of sea surface temperatures, water vapor, etc., to attain accurate measurements of oil spill volume.

- SARDet-100K Dataset: On March 11th, 2024, a new benchmark dataset, SARDet-100K, was released, marking the first large-scale multi-class SAR object detection dataset comparable to COCO-level standards. Fine-tuning our models with the SARDet pre-trained weights would be worth exploring.

- SARDet-100K Research Paper MSFA approach: Exploring the proposed Multi-Stage with Filter Augmentation (MSFA) pre-training framework from this paper, which aims to enhance the performance of SAR object detection models, could be a valuable endeavor.

Acknowledgements

We extend our sincere gratitude to our Capstone instructors, Kira Wetzel and Puya Vahabi, whose continuous support and guidance were instrumental throughout this project. Additionally, we wish to express our appreciation to Marios Krestenitis and Konstantinos Ioannidis for providing us with such an accurate and meticulously documented dataset. We are also indebted to the insights generously shared by various subject matter experts, including Andrew Taylor from OPRED's Offshore Inspectorate at the Department of Energy, Security & Net Zero, UK, Neil Chapman from the Maritime and Coastguard Agency, UK, and Jake Russel from Propeller.